Is Simulation Theory Possible?

Simulation Theory is the concept that we are living in a Simulation. Part of the motivation for the theory comes from the fact that the universe and its fundamental physical constants seem to be finely tuned for life, or at least for the kinds of life we can conceive of. And as best we can tell, the universe is insanely fine-tuned for life.

The only self-consistent philosophical categories of answers to this Fine Tuning Problem are:

1) The Universe is designed by some Intelligence that predates the Universe, like God or something like God.

2) There are billions of trillions of quadrillions of different universes in a “multiverse,” and each of them can have different values of the physical constants. We happen to find ourselves in a finely tuned universe because we can’t find ourselves in a poorly tuned one where life can’t exist.

3) We are living in a computed Simulation of reality that someone set up for whatever reason. They had to make the Universe be (or seem?) finely tuned in order to “support” life, or at least to make us think it does.

As for the first two, one invokes either a very powerful Intelligence that can do anything or an infinite array of universes that can be anything. The evidence for God is hotly debated, and so far there is zero evidence for a multiverse (unless you count the Fine Tuning itself).

Simulation Theory has one philosophical benefit, put forth by Nick Bostrom, that boosted it to prominence almost 20 years ago: If a society ever becomes capable of creating hyper-realistic simulations with intelligent agents inside them, then it would likely create such simulations, and probably more than one. Also, if these simulations ran long enough, the simulated intelligent agents would also likely “invent” hyper-realistic simulation technology eventually, and they would likewise probably run multiple simulations. And the simulations inside the simulations would also… you get the point.

A single real universe with such simulations would spawn a humongous hierarchy of nested simulations, producing a huge total number of simulated realities. If that is the case, and those simulations are indistinguishable from reality, then the chance that we are in the one real reality rather than in one of the multitudinous simulated ones is vanishingly small. Thus, we are very likely living in a simulation (or so the theory goes).
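The counting behind this argument can be made concrete with a toy model. The sketch below assumes, purely for illustration, that every universe capable of simulation runs the same number of child simulations (the hypothetical `branching` parameter) and that nesting stops after a fixed `depth`; neither number comes from Bostrom or from this article, and it weights every universe equally.

```python
def nested_simulation_count(branching: int, depth: int) -> int:
    """Number of simulated universes in a hypothetical tree where every
    universe at one level runs `branching` simulations, `depth` levels deep."""
    # Level k of the hierarchy holds branching**k simulations.
    return sum(branching ** level for level in range(1, depth + 1))


def chance_of_base_reality(branching: int, depth: int) -> float:
    """Probability of being the one real universe, assuming each universe
    (real or simulated) is equally likely to be the one we find ourselves in."""
    total_universes = 1 + nested_simulation_count(branching, depth)
    return 1 / total_universes


for branching, depth in [(1, 1), (2, 3), (10, 5)]:
    sims = nested_simulation_count(branching, depth)
    print(f"branching={branching}, depth={depth}: {sims} simulations, "
          f"P(base reality) = {chance_of_base_reality(branching, depth):.2e}")
```

Even modest numbers swamp the single real universe: with a branching of 10 and a depth of 5 there are 111,110 simulated universes, so the chance of being in base reality is 1 in 111,111.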

The almost mathematical logic of this argument was very compelling, and it also provided an explanation for fine tuning that didn’t invoke God or a ridiculous number of real universes, for those who disliked one or both of those ideas (very, very few humans believe things they would rather not believe, regardless of evidence or lack thereof).

But we understand the physics of how the world works pretty well, and we are also beginning to understand computation pretty well. Thus, we can actually test the feasibility of the Simulation Hypothesis. And I intend to do so in new ways.

But first, let’s make sure we are Steel-Manning the Simulation Hypothesis. By which I mean, we want to describe the strongest, best-thought-out version possible (the opposite of Straw-Manning).

Many people’s initial reaction is that it would take a computer the size of a Universe to simulate a hyper-realistic Universe. Since that would make sufficiently realistic simulations unlikely, a steel-manned Simulation would use several important tricks to reduce the computation needed:

A) Vary the simulation fidelity over distance. The farther an object is from any human observer, the lower the fidelity of its simulation needs to be.

B) Vary the simulation fidelity over time. The events that built up the past can be computed at low fidelity, or even made up from whole cloth, as long as the result is plausible.

C) Use compression. This is how A and B are actually done. If you have a cubic meter of water resting near the bottom of the Mariana Trench, you might not need to simulate every atom. You can probably describe that blob of water with just a few numbers instead and calculate how those few numbers change over time. The same applies to the Earth’s core or a cloud on Neptune. You can compress in time as well, by varying the time resolution: the orbit of Mercury might only need to be updated every microsecond, not every trillionth of a nanosecond.
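To get a feel for how much compression buys, here is a back-of-envelope sketch. It assumes water at roughly 1000 kg per cubic meter (ignoring compression at trench depth) and invents a hypothetical six-number “blob” state; the specific fields and values are illustrative choices, not something the Simulation would necessarily use.

```python
AVOGADRO = 6.02214076e23      # molecules per mole (exact SI value)
WATER_MOLAR_MASS_G = 18.015   # grams per mole of H2O
WATER_DENSITY_KG_M3 = 1000.0  # approximate; ignores compression at trench depth
ATOMS_PER_MOLECULE = 3        # two hydrogen atoms and one oxygen atom
MERCURY_PERIOD_S = 87.969 * 86_400  # Mercury's sidereal orbital period in seconds


def atoms_in_water(volume_m3: float) -> float:
    """Approximate number of atoms in a given volume of liquid water."""
    grams = volume_m3 * WATER_DENSITY_KG_M3 * 1000.0
    moles = grams / WATER_MOLAR_MASS_G
    return moles * AVOGADRO * ATOMS_PER_MOLECULE


def updates_per_orbit(time_step_s: float) -> float:
    """How many state updates one Mercury orbit costs at a fixed time step."""
    return MERCURY_PERIOD_S / time_step_s


# Hypothetical compressed "blob" state: mass (kg), temperature (K),
# pressure (Pa), and a bulk velocity vector (m/s). Six numbers in total.
blob_state = (1000.0, 275.0, 1.1e8, 0.0, 0.0, 0.0)

print(f"atoms in 1 m^3 of water: {atoms_in_water(1.0):.2e}")
print(f"numbers in the compressed blob: {len(blob_state)}")
print(f"updates per orbit at 1 microsecond: {updates_per_orbit(1e-6):.2e}")
print(f"updates per orbit at 1e-21 s:       {updates_per_orbit(1e-21):.2e}")
```

This reports roughly 1.00e+29 atoms against six numbers, and about 7.60e+12 versus 7.60e+27 updates per orbit, so the coarser time step alone saves 15 orders of magnitude.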

But how much computation can a Universe-sized computer accomplish per second? The following paragraph may or may not cause your eyes to glaze over, but the paragraph after that has the answer.

The Landauer limit tells us that there is a fundamental limit (based on the laws of thermodynamics) to the smallest amount of energy it can possibly take to flip (strictly, to erase) a bit, which is the smallest and most fundamental computation possible. This minimum energy also depends on the temperature of the computer holding the bit, because erasing a bit increases entropy, and the heat that must be dumped to carry away that entropy scales with the temperature of the cold heat sink the computer is connected to. (For your laptop, that would be room temperature, because the fan uses the room’s air to cool the processor.) Landauer’s equation for the tiniest amount of energy required to flip a bit is:

$$E_{\min} = k_B T \ln 2$$

where $k_B$ is Boltzmann’s constant, $T$ is the temperature of the computer’s heat sink, and “ln 2” is the natural logarithm of 2, which is about 0.693. So the colder the heat sink, the less energy it takes to flip a bit in a maximally energy-efficient computer. For room-temperature computers, this works out to about 2.8 zeptojoules per bit flip. (Zepto- means one sextillionth, or 10^-21, of something.)
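Plugging numbers into Landauer’s formula is a one-liner. This sketch assumes a 293 K (about 20 °C) heat sink for “room temperature” and 2.725 K for the Cosmic Microwave Background; the function name is just an illustrative choice.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23  # exact value in the 2019 SI


def landauer_limit_joules(temperature_k: float) -> float:
    """Minimum energy to erase one bit at a given heat-sink temperature: k_B * T * ln 2."""
    return BOLTZMANN_J_PER_K * temperature_k * math.log(2)


room = landauer_limit_joules(293.0)  # room-temperature heat sink
cmb = landauer_limit_joules(2.725)   # Cosmic Microwave Background as the heat sink
print(f"room temperature: {room / 1e-21:.2f} zJ per bit")
print(f"CMB temperature:  {cmb / 1e-21:.4f} zJ per bit")
```

That gives about 2.80 zJ per bit at room temperature and about 0.026 zJ per bit against the CMB, roughly a hundredfold saving from the colder heat sink.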

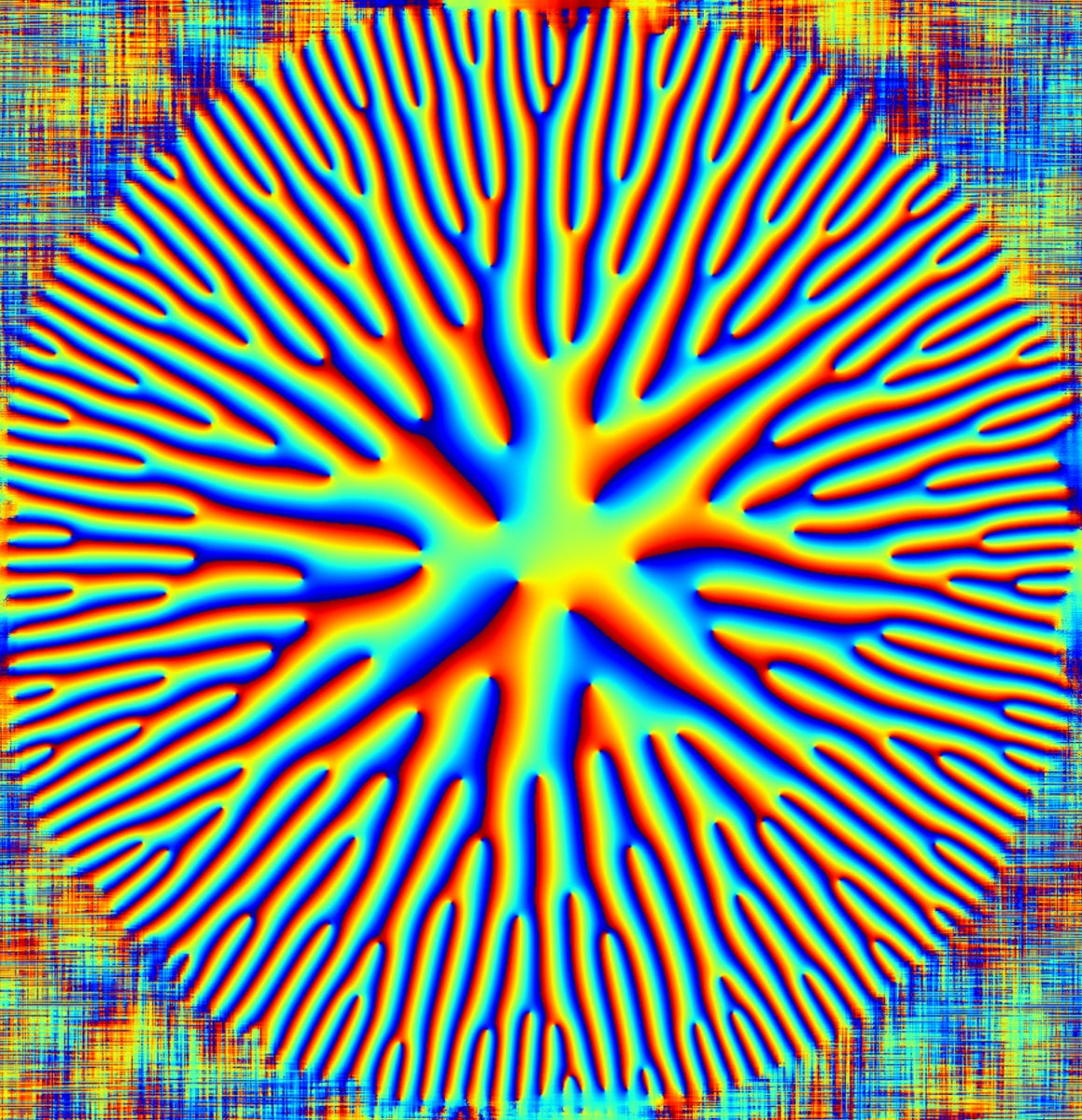

For a computer the size of the universe (using every atom in the universe), the coldest available heat sink is the temperature of background space, the electromagnetic radiation bath of the Cosmic Microwave Background. Currently, that is about 2.7 degrees above absolute zero (2.7 kelvin). If we take all the matter of the universe, smash it together in one place, and (magically?) turn it into a maximally efficient computer, then Seth Lloyd has computed that 10^90 calculations per second can be performed using all the energy in the Universe.

But here we have a big problem. That’s not enough computation to fully simulate even just one human.

Quantum particles interact with each other in such a way that the computation needed grows exponentially with the number of particles. In a group of 150 atoms that are all interacting with each other (like a section of DNA in the nucleus of a cell), the number of combinations to track is at least 4^150, because there are 4 fundamental particle properties that deeply matter for particle interactions (charge, mass, spin, and momentum). And this is actually much simpler than our true quantum reality. This alone would require 2x10^90 calculations, which crashes our Universe computer even if we only fully simulate these atoms once per second. But quantum interactions play out on timescales of fractions of a picosecond; stepping at femtosecond (10^-15 second) resolution means we need to do 2x10^105 calculations per second.

For 150 strongly interacting atoms.

And we have ignored all the internal complexity of each atom.
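The 150-atom estimate can be checked with Python’s arbitrary-precision integers. This sketch takes the article’s simplified counting (4 properties per atom, 150 atoms) at face value, assumes femtosecond time steps, and compares against the 10^90 operations per second quoted above; it is a back-of-envelope check, not a model of real quantum dynamics.

```python
import math

PROPERTIES_PER_ATOM = 4        # charge, mass, spin, momentum (the article's simplification)
ATOMS = 150
STEPS_PER_SECOND = 10**15      # assumed femtosecond time steps
LLOYD_OPS_PER_SECOND = 10**90  # universe-computer budget quoted in the article

states = PROPERTIES_PER_ATOM ** ATOMS      # exact integer; 4**150 == 2**300
ops_per_second = states * STEPS_PER_SECOND

print(f"4^150 is about 10^{math.log10(states):.2f}")
print(f"needed per second: about 10^{math.log10(ops_per_second):.2f}")
print(f"shortfall factor: about 10^{math.log10(ops_per_second / LLOYD_OPS_PER_SECOND):.2f}")
```

The three lines come out near 10^90.31, 10^105.31, and 10^15.31: even this stripped-down count overshoots the universe-sized computer by about fifteen orders of magnitude.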

This already bumps up against one of our main observables: when humans deeply study strong quantum interactions in particle accelerators or Bose-Einstein condensates, they are probing this framework, and they see a consistency that matches theory. That consistency would not be possible at the granular level of those experiments if computational shortcuts were taken. Bose-Einstein condensates (large collections of ultra-cold atoms that smear out and act in some ways like a single atom) would form very differently from what theory predicts.

For instance, in particle accelerators, if the simulation’s time step were longer than a fraction of a picosecond, protons travelling near the speed of light would not interact properly, because they would phase past each other between “clock ticks.” Catalyzed chemical reactions would also be extremely strongly affected by simulation shortcuts.
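The “phasing past each other” failure is the same tunneling artifact familiar from fixed-time-step physics engines. A minimal sketch, assuming a naive collision check that only samples positions at clock ticks, a proton diameter of about 1.7 fm, and a head-on approach at 0.99 c in the lab frame; `collision_seen` is a hypothetical helper, not any real simulator’s API.

```python
C = 299_792_458.0          # speed of light, m/s
PROTON_DIAMETER = 1.7e-15  # m, roughly twice the ~0.84 fm proton charge radius


def collision_seen(initial_gap: float, closing_speed: float, dt: float,
                   max_ticks: int = 10_000) -> bool:
    """Naive fixed-step check: the gap is only sampled at clock ticks, so a
    collision is registered only if some tick lands inside the overlap window."""
    for tick in range(max_ticks):
        gap = initial_gap - closing_speed * dt * tick
        if abs(gap) < PROTON_DIAMETER:
            return True   # the protons overlap at this tick
        if gap < 0:
            return False  # they already passed through each other between ticks
    return False


closing = 2 * 0.99 * C  # two protons at 0.99 c approaching head-on
start_gap = 1e-12       # start 1 picometre apart

print(collision_seen(start_gap, closing, dt=1e-12))  # picosecond ticks: False
print(collision_seen(start_gap, closing, dt=1e-24))  # yoctosecond ticks: True
```

At picosecond ticks each step closes the gap by roughly 0.6 mm, hundreds of billions of proton diameters, so the overlap is never sampled; only steps on the order of 10^-24 s catch it.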

This means that the fidelity reductions A) and B) can only work if they are switched off whenever scientists look carefully at quantum systems.

We have other problems. Photosynthesis appears to be a strongly quantum phenomenon. The rate of photosynthesis would drastically change if we reduced the spatial or time fidelity of the simulation.

But wait! Equations are the ultimate compression algorithm. We have equations that can be used to calculate photosynthesis rates, to predict how Bose-Einstein condensates should act, and even to describe how a hamburger caramelizes and turns brown on a grill. What if the simulation uses equations to model the world, vastly reducing the computational load?

Well, first of all, the equations of Quantum Mechanics don’t lend themselves to efficient computation. They don’t describe the emergent properties of quantum particles; they describe the evolution over space and time of each particle, and must be calculated step by step. The estimate above, that simulating just 150 atoms takes 10^105 computations per second, already uses those equations. And while we can probably simulate photosynthesis at the leaf level using equations, there is something else a Simulation cannot do:

A Simulation at lower-than-real fidelity cannot create believable historical records of near-stochastic quantum-influenced events.

This is the key new idea in this article. Consider an asteroid spinning in space that might one day be captured by astronauts.

These astronauts can bring it home, and scientists can slice it in half. When scientists view the sliced section under a microscope, they may see the tiny tracks that cosmic rays have left in some of the asteroid’s crystals. Cosmic rays are very energetic particles that arrive from all directions in the galaxy, but they are partly shielded by the sun’s magnetic field. Thus, the tracks preserve a historical record of cosmic-ray directions over time.

But the asteroid has been tumbling and banging around for most of the age of the solar system, more than 4 billion years.

Unless one were running the simulation at the highest fidelity this entire time, these cosmic ray tracks would not be consistent with a full time-history of the universe. This is because the origin of cosmic rays and how they interact with asteroids is fundamentally quantum in nature. There are no simplifying equations that would allow this historical record of nearly random cosmic ray flux to be calculated well and consistently across space and time.

Now, randomness could simply be inserted, but there would be no consistency in the historical record, because randomness does not always average out. Careful enough observations of enough asteroids, or of enough sections of the same asteroid, would reveal the ruse of lower-fidelity simulation by showing that careful statistical quantities don’t match up. For instance, average (faked) flux directions from very high energy events that differ across the length of a single asteroid would destroy the illusion of reality. And as we have shown, you either have to reduce resolution, or the Simulation is completely infeasible for quantum mechanical systems.

The same is true for many other types of quantum-sensitive historical records, including coherent reception of light and neutrinos simultaneously from ancient supernovas. These simply cannot be easily simulated, because doing so requires quantum-level simulation across vast periods of time and space.
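The statistical check described for asteroid sections can be sketched as a two-sample permutation test on mean track direction. This is one possible approach, not an established protocol for meteorite track analysis: it assumes 2D track angles, models honest tracks as von Mises draws around one shared direction, and models a hypothetical “faked” section as having its own independently drawn bias of 0.5 radians. All names and parameters are illustrative.

```python
import math
import random


def mean_resultant(angles):
    """Mean of the unit vectors for a list of angles in radians, as (x, y)."""
    n = len(angles)
    return (sum(math.cos(a) for a in angles) / n,
            sum(math.sin(a) for a in angles) / n)


def direction_difference(a, b):
    """Distance between the mean resultant vectors of two samples."""
    (ax, ay), (bx, by) = mean_resultant(a), mean_resultant(b)
    return math.hypot(ax - bx, ay - by)


def permutation_p_value(a, b, n_permutations=500, seed=0):
    """How often a random re-split of the pooled tracks gives a mean-direction
    difference at least as large as the observed one."""
    rng = random.Random(seed)
    observed = direction_difference(a, b)
    pooled = list(a) + list(b)
    hits = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        if direction_difference(pooled[:len(a)], pooled[len(a):]) >= observed:
            hits += 1
    return (hits + 1) / (n_permutations + 1)


rng = random.Random(42)
# Hypothetical honest record: both sections share one underlying flux direction.
section_1 = [rng.vonmisesvariate(0.0, 2.0) for _ in range(500)]
section_2 = [rng.vonmisesvariate(0.0, 2.0) for _ in range(500)]
# Hypothetical faked record: this section was given its own independent bias.
faked_2 = [rng.vonmisesvariate(0.5, 2.0) for _ in range(500)]

print(permutation_p_value(section_1, section_2))  # usually not small
print(permutation_p_value(section_1, faked_2))    # very small
```

A tiny p-value for two sections of the same rock is exactly the kind of mismatch the argument says careful observers would eventually find.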

And this stabs at the heart of Simulation Theory.

We have had the ability to look for such discrepancies in several different places for decades now.

And in most Simulations, perhaps all Simulations, the intelligent agents must not know they are in a Simulation. In any simulation of intelligent agents, the agents' knowledge of their world is a key parameter influencing their behavior.

Small changes in world apprehension have very large impacts on motivation, goal determination, and even mental state.

For instance, think of how knowledge of germ theory impacted the entire field of medicine. It was a huge impact, and it caused behavior and results to exponentially diverge from the case where knowledge of germ theory didn't exist.

In a similar manner, our knowledge of the Universe being a Simulation would DRASTICALLY change how and why we do things as individuals and a species.

Almost no other nugget of knowledge could have a bigger effect on the progression of humanity than the knowledge that we are in a Simulation.

No rational Simulators would allow such an idea to gain purchase in their artificial reality.

Thus, the very existence of Simulation Theory (and its being taken seriously by some) disproves Simulation Theory, or at least shows it to be extremely unlikely.

So here is what I assert:

We live in a world where we now have the ability to detect simulation artifacts.

Detecting, and thus knowing, that we are in a Simulation would almost certainly ruin the Simulation.

Ruining the Simulation would cause it to be ended.

The world has not ended.

So we are not living in a Simulation.
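The logical form of these five steps can be checked mechanically. This Lean 4 sketch encodes each claim as an opaque proposition (the names are illustrative, not from the article) and reads the first premise as “if we are in a Simulation, its artifacts would be detected.” It shows only that the conclusion follows from the premises by chaining implications; whether the premises are true is a separate question.

```lean
-- Each step of the argument is an opaque proposition; the proof just chains them.
theorem not_in_simulation
    (InSimulation ArtifactsDetected SimulationRuined WorldEnded : Prop)
    (detectable : InSimulation → ArtifactsDetected)
    (ruins : ArtifactsDetected → SimulationRuined)
    (ends : SimulationRuined → WorldEnded)
    (notEnded : ¬ WorldEnded) : ¬ InSimulation :=
  fun inSim => notEnded (ends (ruins (detectable inSim)))
```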

Of course, maybe just having a few people understand that we are living in a Simulation would not ruin it. But if people did see this reasoning and really began investigating some of those stochastic quantum records, then they would be able to fully detect the artifacts of the Simulation, and this may become widely known.

So maybe it’s better if I don’t publish this arti—

As to the self-consistent philosophical stances to the Fine Tuning Problem, your #s 1 and 3 are identical. God and the Simulation Programmers are one and the same. Simulation "Theory" is simply a restatement of Augustine's (or al-Ghazali's) arguments for the existence of God, and Augustine did it better than the half-baked moderns. In my observation, Simulation "Theory" is a way for middle-aged men still in love with the fashionable atheism of their adolescence to putatively hold onto that atheism while simultaneously and only semi-consciously admitting it has failed. Simulationism is also a leading indicator that the aggressive philosophical materialism of the Post-Enlightenment era is failing.

The turtles-all-the-way-down nature of Simulationism also refutes it, as you derive in great mathematical depth (a bit too much, perhaps, IMO; more on that in a bit). No matter what, Simulationism must eventually collapse back down to a core base reality of physical substrate. So why assume we are not that core base reality of substrate? The only advantage to assuming we are the Simulation is that it inflates our egos in Gnostic ways, "luminous beings are we" to quote Master Yoda, and Gnostic obsessions are a hallmark of this late and failing age of philosophical materialism. Also, there is no way to assume the clock ticks or cosmic rays you use as metrics of measurement in our reality ARE accurate if our reality is a Simulation in the style of al-Ghazali, where all is shaped by the arbitrary and capricious, albeit loving, will of God aka Programmer aka God. To simply assume measurements are reliable is to credit the perspectives of Augustine a priori. IOW, if we live in al-Ghazali's reality, your entire mathematical digression fails, while the more basic critiques hold up.

And again, Augustine and Aquinas did the whole "creation is an ACTUAL product of God aka Programmer aka God" set of reasoning and logic better, a millennium and more ago, than the Modern Nerds do, while Simulationism is an indulgence of Gnosticism, which is anti-life for additional reasons all its own.

I do like the way you formulated your fundamental assertion of Simulation = Substrate. A useful restatement of or corollary to the Incompleteness Theorem, and a fast way to refute those middle aged Militant New Atheists stuck in the reverie of their adolescence. Kudos, and my thanks.