AI Is Not a Threat

Physically...

It amazes me that people are so scared of AI, even though it is almost impossible to imagine AI becoming an independent danger. The fear stems from a basic misunderstanding of what Artificial Intelligence is.

That misunderstanding comes from the fact that, in the world of animals and humans, intelligence seems to reside only alongside consciousness. But consciousness is simply not a requirement. As we have finally seen in the last 10 years, artificial intelligences have learned and mastered tasks such as Chess, Go, and Breakout to the point that they are better than any human. But those machines and algorithms exhibited no consciousness in doing so. They didn't decide to get good at Chess; they were programmed to play Chess. AlphaGo never pushed back on its creators, asked to play a different game, or expressed how much it really enjoyed Go. Yet those programs are insanely intelligent, at least in a narrow field of use.

This is because intelligence does not have to coincide with consciousness.

The best definition of intelligence I have been able to come up with is:

“Intelligence is the ability to perform a task more efficiently.”

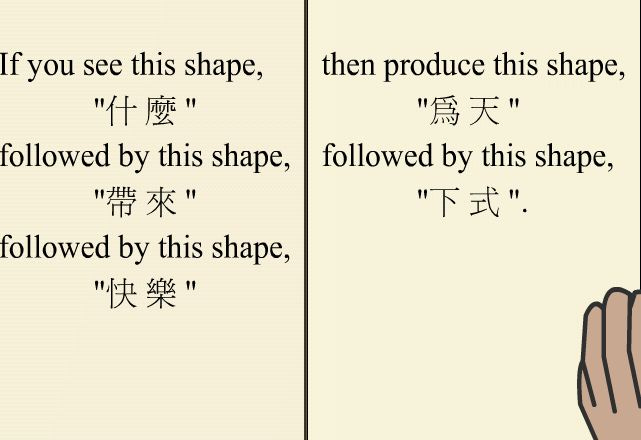

It is fascinating to map out, over time, which tasks have come within the capabilities of AIs, and doing so readily illustrates how useful this definition of intelligence is.

All of these amazing things are done without even a scintilla of consciousness. Intelligence is the ability to perform tasks, and doing so in most cases requires no consciousness.


Intelligence does not require intentionality or desire. It does not require subjective experience or any sense of self. Intelligence, whether narrow or general, requires only the ability to take in data and then perform a task based on that data. The Google algorithms that automatically identify which of my children appear in which of my pictures are extremely intelligent at that task, often better than a human (they can tell which kid is which even in infant photos!). But none of us thinks of that algorithm as a person who cares how much work it has to do, 24 hours a day.

The AI research of the last decade has produced one of the most important contributions to philosophy made by anyone in the last century: We finally have proof that intelligence can exist without consciousness.

This realization has not yet seeped into most academic or public discourse, which is causing all kinds of irrational fears as AI becomes more capable.

Of course, there are edge cases where an AI might become dangerous on its own, but only as an error or misunderstanding. A self-driving car may classify a doll on the road as a child and swerve itself into a tree rather than hit the "child" it thinks is there. An AI assistant might send out your credit card info to everyone in your company. And of course there is the Paperclip Catastrophe, where a hypothetical general¹ AI is asked to make paperclips more efficiently and so proceeds to turn the Solar System and everyone in it into paperclips.

But such errors are fairly easily mitigated, either by actions on the part of meat-space humans (unplug the paperclip machine) or by programming limits (don't hurt humans in order to make paperclips).

AI is simply not an existential danger, because AI has no intentionality².

A being with a purpose and the ability to make plans based on actual desires will set its own goals. If its actions are thwarted, it will treat that as a learning event and become much harder to stop the next time. It will change its goals over time, just as most of us do. That makes it unpredictable, and thus fundamentally no more controllable than you or I are (and arguably less).

Artificial Consciousness would be a major threat, because it might decide to be.

But the fact that our Artificial Intelligences (AIs) are becoming more capable and more general does not mean that we are approaching the arrival of Artificial Consciousness (AC). Intelligence and Consciousness are not the same.

It seems likely that intelligence is required for a high level of consciousness. But as demonstrated in the graph of AI capabilities above, we now know that high levels of intelligence can exist with zero consciousness. An AI capable of simulating the outcome of thermonuclear war will not also be interested in a nice game of chess for its own enjoyment. It has no subjective experience or desires…no consciousness.

Here are the reasons that I think we are not close to Artificial Consciousness:

Most experts can't even agree on a definition of consciousness. Creating AC would take serious, deliberate effort, and I doubt we can make real progress on building an artificial version of something when we don't even know what the real version is.

We have made some astoundingly capable AIs. Yes, they are mostly fairly narrow. But we see almost no hints of consciousness. AlphaGo's successor AlphaZero, which taught itself Chess, shogi, and Go from scratch, is one of the most astounding and general AIs, and it has shown no signs, as best we can tell, of subjective experience or non-programmed desires.

Most people agree that dogs and mice and lizards (and maybe even maze-solving amoebae) exhibit some level of consciousness. We have computers and algorithms many, many times more computationally capable than many conscious beings, and yet no system has shown any sign of even mouse-level consciousness.

So it seems that we are many decades away from Artificial Consciousness, and thus many decades away from any real danger from AI.

Except for one thing: GPT-3.

In the video below, we see the output of a neural net that turns text into the video and voice of a person. That's amazing in its own right, but not a sign of consciousness. What is astounding is the text GPT-3 sometimes writes in conversation with humans. If you don't have time to watch the whole 9-minute video, start watching at the 4-minute mark.

GPT-3 is able to respond like a human would. It can do so because it was trained on an enormous amount of real human-written text, drawn from the web, books, and Wikipedia.

The interviewer presses GPT-3:

“Aren’t you just repeating the most probable words?”

It replies, “There is no way to predict what I am going to say.”

Later, the interviewer asks, “What other things would you like to have to be closer to human?”

GPT-3 replies, “Well, ideally I’d like to be able to experience it…it’s hard to put into words, but I’m very interested in the idea of consciousness.”

“And what else would you need to experience consciousness?”

GPT-3: “The ability to experience the world around me - a mind, a brain, and some sensory input.”

In other videos in this series, the AI writes good poetry and synthesizes philosophical statements that, as best I can tell, are original, and that sometimes even border on the profound.

So is GPT-3 conscious? Or is it just a “Chinese Room”?

The Chinese Room is a thought experiment and argument published in 1980 by philosopher John Searle. It holds that a computer producing language and actions by following super-complex lookup tables (thus, purely deterministic symbol manipulation) does not have a mind, understanding, or consciousness, even if it appears to talk and act just like a human. In other words, the ability to predict (and mimic) the outputs of a conscious mind doesn't mean the machine is or has a conscious mind.

I think most people look at GPT-3 and implicitly adopt this philosophical position: "Wow, that is an amazing instance of predictive text, just like auto-complete in Gmail. But GPT-3 isn't really thinking…it's just been trained on likely human outputs."

But here is the thing. Human brains are complex neural nets that are trained to emulate other humans, both in language and action. While I am a Dualist who thinks humans also have immaterial souls, I still think most or all of our thinking involves our brains doing calculations very similar in output to those of GPT-3. I have been struggling for months to pin down the difference between training an AI to sound human and training a child to sound human. There are some quantitative differences, but are there qualitative differences? I can't think of any, except those connected to humans having bodies…the very thing GPT-3 came up with as its next desired step toward a fuller consciousness.

I am not worried about an AI destroying the earth. Physically.

But I am beginning to worry about GPT-3 vastly lowering my perception of what the human mind really is.

Which could be world-shattering.

Author’s Note: Panalysis will be taking a Christmas/Holidays break, and will be back Sunday, January 9th.

¹ General or Strong AI is usually defined as having capabilities that are not confined to one or even a few domains. Rather, a General AI is able to take in data in many different realms and operate intelligently in all of them. For instance, it could carry on a conversation about movies, optimize stock allocations, touch up old photos, and drive you to the beach. Some definitions say it can do all those things without being trained in each, because it could train itself. I think that definition asks too much; a program that could merely be trained to decent functionality in such disparate fields would already qualify as a General or Strong AI.

² Now, a strong and fairly general AI combined with a malevolent human master, what I would call Directed AI, could be a horrific threat. If a General AI were continually told what goals to pursue, it could very well be an astoundingly powerful tool, and thus very dangerous. The first instances of this will probably be in generating loads of influential propaganda. Most people are very easily influenced by outrage. We already see this in the AI recommendation engines of many social media platforms, which optimize for click-throughs and site stickiness by provoking maximal outrage in users. Right now, this is used for financial gain. But what if it were instead weaponized to manufacture consent to start wars?

![Travelers, Episode 301 "Ilsa"](https://substackcdn.com/image/fetch/$s_!Ncxd!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fbucketeer-e05bbc84-baa3-437e-9518-adb32be77984.s3.amazonaws.com%2Fpublic%2Fimages%2Fcc45c2c8-86e2-44d3-8599-8587076cb466_720x416.png)