The Biggest Little Physics Experiment

Can we finally understand gravity?

As discussed earlier, fundamental physics has been stagnating for almost 50 years. Not since 1973 has a new theoretical prediction been made that has later proven out to be experimentally proven true.

But there is an experiment that can be done with today’s technology that might transform how we think of space, time, and information:

We can try to weigh information.

It is possible that information is a fundamental contributor to reality, and not just an emergent human construction. If this is the case, then the most likely way that information would affect reality is that it would bend spacetime. It would have extra gravity.

What Is Information?

“Information” is a term used in many different ways, so we need to define it carefully here. We will take a very short dive into Information Theory in order to get everyone on the same, very important page. You can do this. I’ll pay you in puggles.

Claude Shannon, widely considered the father of Information Theory, gave us a very robust definition of information, and it is surprising. Shannon was looking at how information is sent through communication channels and then recovered at the other end. He realized that if the channel has a “normal” state of, say, a voltage level of 1 volt, then this “normal” state doesn’t convey much information. In the same way, if the channel is noisy, then that white noise doesn’t tell us much, even though its voltage value is changing rapidly (but randomly) over time. The big leap that Shannon made in information theory is that surprises are what conveys information.

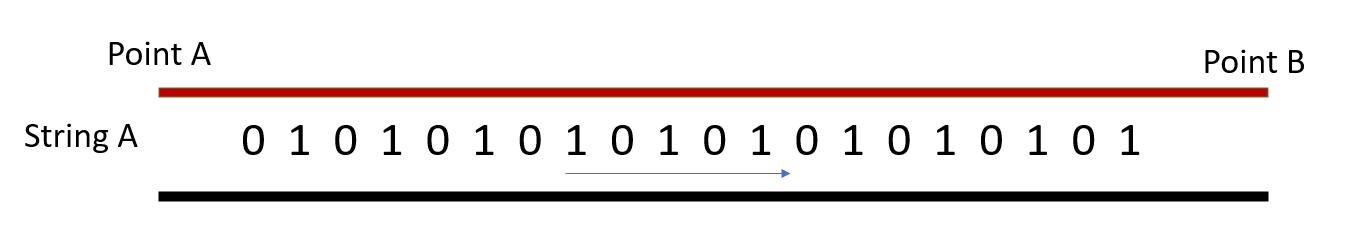

Let’s look at channels of information. In this context, “channel” just means any way of conveying a signal. Let’s imagine a pair of wires in a cable that stretches a mile from Point A to Point B. We can put electrical signals on that cable. Let’s say that the receiving end is Point B.

If the channel is almost always kept at 1 volt, then the way that you convey information across the channel is to make it deviate from 1 volt. You could send morse code by taking the voltage down to 0 volts briefly in long or short pulses. For the noisy channel, you could send signals that appear stronger than the noise. These 0 volt pulses or pulses that leap out above the noise would be “surprising,” in that they are not the normal state of the channel when it has no intentional information in the channel.

Shannon actually created a mathematical framework for measuring how much information is in a channel: The amount of information in a channel is the binary (base 2) logarithm1 of the amount of surprising information.

I bet you haven’t seen the word “surprising” in a mathematical definition before. Something is surprising if it is unlikely. So to put this in a mathematical framework, we have to define “unlikeliness.” This is pretty easy: We just define a probability p(E) that is the probability of a certain event happening, and then invert it. Take the base-2 logarithm of that (log₂), and it tells you the amount of information it takes to describe that event.

In this equation, p(E) is the probability of you seeing a certain event E, and I(E) is the information conveyed by the fact that you did see that event E.

You made it this far. You get a puggle:

I have a hard time understanding equations sometimes, until I use them. Let’s try that here. If we have that original information channel that’s always set at 1, then are you surprised if you measure the output of that channel and see a 1? No, of course not. Because the probability p(E) of you seeing a ‘1’ in a channel that’s always a ‘1’ is a probability p(E) of 100%, or 100. So how much surprise, how much information did you get from viewing a ‘1’? Using the equation:

I(E) = log₂ (1/1), because p(E) = 1

And because the log(1/1) = log(1), we have:

I(E) = log₂ (1), which is zero.

Because the logarithm of 1 is always zero.2

So how much surprising information did we get by viewing a ‘1’ when he channel is always a ‘1’? Zero. We learned absolutely nothing.

Yeah, I know. Maybe that’s a little dry. We can moisten this up by analyzing some more streams of data that we might find in a more normal channel. Let’s say that a channel could equally be a 1 or a 0, just like most digital wires, like one of the pairs of wires you might find in an ethernet cable. Let’s say that we see the following information:

String A: 01010101010101010101

It’s just alternating 0 and 1, repeated ten times. As you can guess, once the pattern is figured out, there are few surprises. In fact, only the pattern (two bits) and the length (10 units) is a surprise. One could argue that the number 10 takes 4 bits to describe, and the pattern takes two bits. So it takes 6 bits to describe this. I’m done with equations, but if we took the log of the probabilities, we would get close to 6 bits of information to describe this string, even though it’s comprised of 20 bits.

Now let’s look at another string:

String B: 00101001101101010010

It’s 20 bits long, but there is no discernible pattern. So every bit is a surprise. So we really have 18, 19, or 20 bits of surprise, depending on how far down the pattern-finding rabbit hole you are willing to go. But as you can see, it is a non-patterned array of bits. String B contains a little more than three times more information than String A, because String B contains about three times more surprise than A.

You’ve earned another puggle.

So What?

So now you know enough to be informationally dangerous. The more information it takes to describe a system, the more entropy it contains (I talk more about what entropy is below). High entropy systems are messy and take a ton of information to describe. A billion atoms of DNA, with all their encoded information, have WAY more entropy than a perfect single crystal of a billion atoms, because the crystalline pattern repeats in 3D just like the 010101… of String A.

Now we come to the experiment.

Hypothesis: Information itself is a fundamental parameter of the universe. It can manifest by very weakly deforming spacetime when a lot of information is crammed into a small space. In other words, information can cause gravity.

Test: We want to cram as much information as possible into the smallest possible region of space using current technology and see if it makes gravity.

Method: The best way that I have figured out how to do this is to create a chunk of matter that uses the configuration of that matter to write information on it. We do this all the time with hard drives and CDs and even optical storage in crystals. We accomplish this writing of information by shifting the state of matter at a fairly small scale. For instance, in a disk hard drive, we magnetize tiny regions of magnetic material in the “up” or “down” position, and each region counts as a 1 or a 0.

Specifically, I propose to write onto a lightweight beryllium or carbon crystal, such as diamond, in 3D. Using intersecting lasers, we can induce very localized tiny electric field concentrations, and those can be used to make tiny modifications to the atoms in the crystal. If we can use pulsed lasers at very high frequencies, then it is possible to write the data at almost the atomic level.

This research got the defect size down to less than 40nm in diamond, which is only a few hundred carbon atoms across.

Let’s postulate that we can get a defect rate (or modify individual electron spins) such that we can get 1 bit of information written for every 4 atoms in a crystal, and imagine that we can do so in 3D.

So if we had 1 cubic centimeter of diamond material, that would be 3.51 grams of diamond, which is about 1.78 x 10^23 atoms.3 If we had 1 bit of data encoded for every 4 atoms, that would mean we had 4.45 x 10^22 bits, which is half a million exabits, or about half a billion terabytes of data. In something the size of a sugar cube.

But as we learned above, bits are not the same as information.

If I just wrote all zeroes, that would be a lot of bits, but the entropy, the actual Shannon Information content of that crystal would be very low. The same is true if I wrote alternating 1s and 0s, or even if I just used binary to count from 0 to 100 and then repeated that bajillions of times.

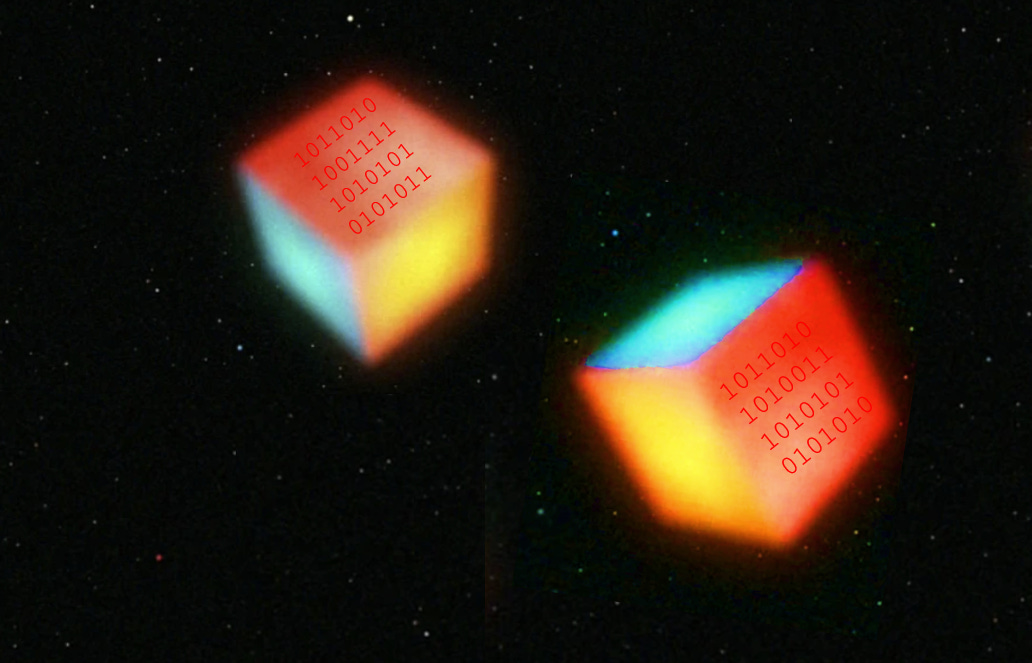

But I want to build two of these cubes:

Cube #1 is the low information cube. Let’s say that what is encoded in it is a repeated string in binary of counting from 0 to 7 over and over and over. The total bits may be almost a million exabits, but the amount of information would be less than 1,000 bits of Shannon information, of informational entropy.

Cube #2 is the high information cube. I will put the digits of pi into that cube. The digits of pi are the perfect example of maximal informational entropy, because there is no consistent pattern. We can know the first billion digits of pi, but we have no idea what the billionth + 1 digit is going to be until we calculate it exhaustively. We will be using way more than a billion digits of pi. The Shannon information of this cube will be very near half a million exabits, or about 70,000 quintillion digits of pi.

Remember, the hypothesis is that a collection of high information entropy weighs more than a collection of low information entropy. We have to have made the cubes with the same number of atoms, and we have to have similar bonding energy distributed throughout the bonds in each crystal (because added energy means a tiny bit of added mass). We also have to have a very precise scale or way of measuring weight differences. Because if information had a strong impact on weight, we would have noticed by now. So we are looking for a very small difference in weight, maybe a nanogram or a microgram if we are lucky.

We can have the two cubes be transported far into space and have each be placed a foot from a 1 cubic foot iron sphere, and see how long it takes for them to touch (would be on the order of a month or so). We could create a metastable 3 body system of cubes orbiting very slowly and closely in a special pattern, and look for the imbalance of one cube being a tiny bit heavier (we may want to make them spheres instead of cubes for gravitational orbit simplicity).

We could even just make a really nice scale inside a very hard vacuum here on Earth.

What Would It Mean?

What would it mean if we did see a significant difference in weight? Well, it would show that gravity itself might be entropic. Erik Verlinde postulated and somewhat popularized the following concept, which I am greatly simplifying because honestly, you’ve already endured enough abstract math for one article:

Matter in its normal configuration represents a form of informational entropy.

The universe, since the Big Bang, has tended toward higher entropy.

Entropy goes up when matter pieces come together. Because the universe is always trying to increase entropy, the universe basically draws matter together so that entropy is increased. This very weak force is gravity. I am going to elaborate here a little bit.

Entropy is basically a measure of how much information you can write into a chunk of matter without changing how it looks or feels at the macroscopic scale. You basically count up all the different microscopic arrangements that the tiny pieces of matter could take without changing the whole piece from, say, charcoal to diamond. The number of those little states is basically the entropy of the chunk of matter.

The entropy of each chunk goes up exponentially, not additively, with the number of particles it contains. A chunk of matter with 700 particles will have a much, much larger entropy than two separate chunks with 350 particles each. So therefore, when two chunks of matter merge, the entropy pretty much always goes way up.4

The Universe likes higher entropy, and so this might be why chunks of matter are drawn to each other. Don’t ask me why it likes higher entropy. Probably because it started with incredibly and inexplicably low entropy…? But the hypothesis is that this desire for lower entropy is the reason gravity exists.

This is not a contradiction of Einstein’s General Relativity. Einstein taught us that matter curves spacetime, and curved spacetime draws in matter. It doesn’t really give an explanation of why matter curves spacetime…just how. (It does explain why matter falls into curved spacetime…the curving exchanges flows of time for flows of space that drag matter along.)5

But now perhaps you understand why I designed this data cube comparison experiment.

All matter represents information just by existing. Even the “low data” cube I designed above actually carries a TON of information, in that information is required to describe the presence of its atoms. But the extra Shannon Information carried by the “high data” cube might set it apart in how the high data cube interacts with the universe. It has normal Atom Information and also a lot of Shannon Information.

If that makes it “weigh” more, then it indicates that Verlinde very well might be right about Entropic Gravity, and it shows that information is fundamental to the way the Universe works.

Which would be the biggest physics breakthrough of the century.

I would be very drawn to that information.

A base 2 logarithm just means this: If you have a number, say 8, then the log₂ of 8 is the exponent you put on the 2 in order to get 8. Eight is two cubed, meaning 8 = 2³. So log₂(8) = 3. Log₂(32) = 5, because 2 x 2 x 2 x 2 x 2 = 32.

Log(1) = 0 because two to the zero power is 1. Any number to the zero power is 1. It’s a rule of exponents.

6.023 x 10^23 (1 mole of) atoms times 3.51 grams divided by 12 grams per mole

The temperature of the chunks and the way the newer, bigger chunk has its tiny pieces stuck together (or not) also matters, so there are super hard to make exceptions to this statement.

Or at least, that’s one way to look at it. See the linked article for a fuller explanation.

Joshua! Your writing is incredible, it's some of the most rigorous first-principled thinking I've come across (and I know how much of a buzzword "first principles" has become). Reminds me of Tim Urban (who I also love), but with a different writing style.

Are you on Twitter? I can't find you anywhere online, but I've been avidly consuming your past content.